- #Cudalaunch slow how to#

- #Cudalaunch slow install#

- #Cudalaunch slow driver#

- #Cudalaunch slow windows 10#

- #Cudalaunch slow code#

This is different from using cudaEvents (CUevent). The profiler measures as closely as possible from the kernel's first instruction to its completion. When you only collect trace information, the profilers are minimally invasive. When performing kernel profiling, however, the profilers do call the equivalent of cudaDeviceSynchronize between launches, because they have to collect the PM counters. Nvprof, CUPTI, the Visual Profiler, and the Nsight VSE CUDA profiler do not set CUDA_LAUNCH_BLOCKING. If you call cudaEventQuery(0), you can cause an early submission of the buffer. If you're using a mobile data connection, make sure it is fast enough to load the video seamlessly.
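For comparison with the profiler timings, the usual event-based approach records a `cudaEvent_t` into the stream before and after a launch and reads the GPU-side elapsed time between the two records. The sketch below assumes a launch on the default stream; the kernel `scale_kernel` and the helpers `check` and `blocks_for` are hypothetical names chosen for illustration.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical kernel used only as something to time.
__global__ void scale_kernel(float* data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Number of blocks needed to cover n elements (ceiling division).
int blocks_for(int n, int block) { return (n + block - 1) / block; }

// Abort with a message if a CUDA runtime call fails.
static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s failed: %s\n", what, cudaGetErrorString(err));
        std::exit(1);
    }
}

int main() {
    const int n = 1 << 20;
    float* d_data = nullptr;
    check(cudaMalloc(&d_data, n * sizeof(float)), "cudaMalloc");
    check(cudaMemset(d_data, 0, n * sizeof(float)), "cudaMemset");

    cudaEvent_t start, stop;
    check(cudaEventCreate(&start), "cudaEventCreate(start)");
    check(cudaEventCreate(&stop), "cudaEventCreate(stop)");

    // Both events go into the same (default) stream as the kernel, so the
    // elapsed time covers GPU-side work between the two records, not the
    // host-side cost of the launch call itself.
    check(cudaEventRecord(start, 0), "cudaEventRecord(start)");
    scale_kernel<<<blocks_for(n, 256), 256>>>(d_data, n, 2.0f);
    check(cudaGetLastError(), "kernel launch");
    check(cudaEventRecord(stop, 0), "cudaEventRecord(stop)");
    check(cudaEventSynchronize(stop), "cudaEventSynchronize");

    float ms = 0.0f;
    check(cudaEventElapsedTime(&ms, start, stop), "cudaEventElapsedTime");
    std::printf("kernel time measured with events: %.3f ms\n", ms);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d_data);
    return 0;
}
```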

#Cudalaunch slow driver#

On WDDM, the CUDA driver does not submit work to the GPU until the queued commands exceed a set size in bytes, or until you request the work to complete.
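A rough sketch of nudging the driver to submit batched work without waiting for it is shown below. The text mentions cudaEventQuery(0); this sketch swaps in `cudaStreamQuery` on the launch stream, a call I have more often seen suggested for the same purpose. Treat the flushing side effect as an assumption to confirm in a profiler trace. `spin_kernel`, `query_status_ok`, and `launch_and_flush` are hypothetical names.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel that keeps one thread busy for a while.
__global__ void spin_kernel(float* out, int iters) {
    float v = 0.0f;
    for (int i = 0; i < iters; ++i) v = v * 0.5f + 1.0f;
    *out = v;
}

// cudaStreamQuery returns cudaSuccess when the stream is idle and
// cudaErrorNotReady while work is still pending; both are fine here.
bool query_status_ok(cudaError_t e) {
    return e == cudaSuccess || e == cudaErrorNotReady;
}

// Launch, then query the stream without blocking.
// ASSUMPTION: on WDDM this query prompts the driver to submit its batched
// command buffer early; it does not wait for the kernel to finish.
cudaError_t launch_and_flush(float* d_out, int iters, cudaStream_t stream) {
    spin_kernel<<<1, 1, 0, stream>>>(d_out, iters);
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess) return err;
    err = cudaStreamQuery(stream);
    return query_status_ok(err) ? cudaSuccess : err;
}

int main() {
    float* d_out = nullptr;
    cudaStream_t stream;
    if (cudaMalloc(&d_out, sizeof(float)) != cudaSuccess) return 1;
    if (cudaStreamCreate(&stream) != cudaSuccess) return 1;

    cudaError_t err = launch_and_flush(d_out, 1 << 20, stream);
    // Host work placed here can overlap with the GPU instead of waiting for
    // a later synchronizing call to trigger submission.
    cudaStreamSynchronize(stream);
    std::printf("launch_and_flush: %s\n", cudaGetErrorString(err));

    cudaStreamDestroy(stream);
    cudaFree(d_out);
    return err == cudaSuccess ? 0 : 1;
}
```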

#Cudalaunch slow code#

On a Linux system, with a modern CUDA version, using a null kernel, you would find that each launch takes about 5 usec, and about 20 usec with the cudaDeviceSynchronize() added back in. If you then make the kernel launch more complex by adding bound textures, and time the increment due to each additional texture, I think you will find pretty much the timing stated in the blog article.

If you now repeat the experiment on Windows with the WDDM driver model (which is what you are stuck with when using a consumer GPU), you will see something like this: already with a null kernel, timing is all over the place, from 10 usec to 80 usec for the launch only, that is, without a call to cudaDeviceSynchronize(). Averaged across many launches, it is maybe 20 usec. Add back the call to cudaDeviceSynchronize() (but still using a null kernel) and some kernel executions will clock in at significantly above 100 usec.

You are observing the effects of (1) much higher overall overhead in the WDDM driver model, and (2) launch batching in the CUDA driver, which makes the execution times uneven but reduces the overall and average WDDM overhead. I have not performed a test under the WDDM driver model with many bound textures, but it stands to reason that the overhead per added texture will remain significantly higher than what is seen with the Linux driver, or with the TCC driver on Windows for that matter (a non-graphics driver that can operate without much OS interference). In a nutshell, if driver overhead is important for your use case, you would want to use either Linux or a professional GPU with the TCC driver under Windows.

Note that the performance of the host system has some influence on the launch overhead. The above are timings I observed on modern, fast Ivy Bridge and Haswell based desktop machines with a PCIe 3 interface. You may see higher times on a slower laptop operating in battery mode.

BTW, the GTX 750 Ti is an sm_50 device, so you probably want to build native code for that architecture rather than sm_30 to avoid any possible overhead caused by JIT compilation (although in my understanding that overhead should be incurred at CUDA context initialization time).

CudaLaunch is an application for iOS, Android, Windows, and macOS created for remote workers requiring secure and reliable access to your business. The macOS version is available in the macOS App.
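The null-kernel experiment described above can be sketched roughly as follows. It times each launch from the host with `std::chrono`, once for the launch alone and once with cudaDeviceSynchronize() after every launch, and reports min, average, and max. The names `null_kernel`, `Stats`, `summarize`, and `time_launches`, and the count of 10,000 launches, are illustrative assumptions; absolute numbers will depend on the driver model (WDDM versus TCC or Linux), the host CPU, and the CUDA version.

```cuda
#include <algorithm>
#include <chrono>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Empty kernel: any time measured is launch/driver overhead, not work.
__global__ void null_kernel() {}

struct Stats { double min_us, avg_us, max_us; };

// Summarize per-launch timings in microseconds (input must be non-empty).
Stats summarize(const std::vector<double>& us) {
    Stats s{us.front(), 0.0, us.front()};
    for (double t : us) {
        s.min_us = std::min(s.min_us, t);
        s.max_us = std::max(s.max_us, t);
        s.avg_us += t;
    }
    s.avg_us /= static_cast<double>(us.size());
    return s;
}

// Time back-to-back launches of the null kernel from the host, optionally
// calling cudaDeviceSynchronize() after each one.
std::vector<double> time_launches(int launches, bool sync_each) {
    using steady = std::chrono::steady_clock;
    std::vector<double> us;
    us.reserve(launches);
    for (int i = 0; i < launches; ++i) {
        auto t0 = steady::now();
        null_kernel<<<1, 1>>>();
        if (sync_each) cudaDeviceSynchronize();
        auto t1 = steady::now();
        us.push_back(std::chrono::duration<double, std::micro>(t1 - t0).count());
    }
    cudaDeviceSynchronize();  // drain anything still queued
    return us;
}

int main() {
    cudaFree(0);          // create the context up front so it is not timed
    time_launches(100, true);  // warm-up launches, results discarded
    for (bool sync_each : {false, true}) {
        Stats s = summarize(time_launches(10000, sync_each));
        std::printf("%s: min %.1f us, avg %.1f us, max %.1f us\n",
                    sync_each ? "launch + sync" : "launch only",
                    s.min_us, s.avg_us, s.max_us);
    }
    return 0;
}
```

Reporting min and max alongside the average is deliberate: with launch batching, the spread between them is likely to say more than the mean alone.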

#Cudalaunch slow windows 10#

The Windows version is available from the Microsoft Windows 10 App Store.

#Cudalaunch slow install#

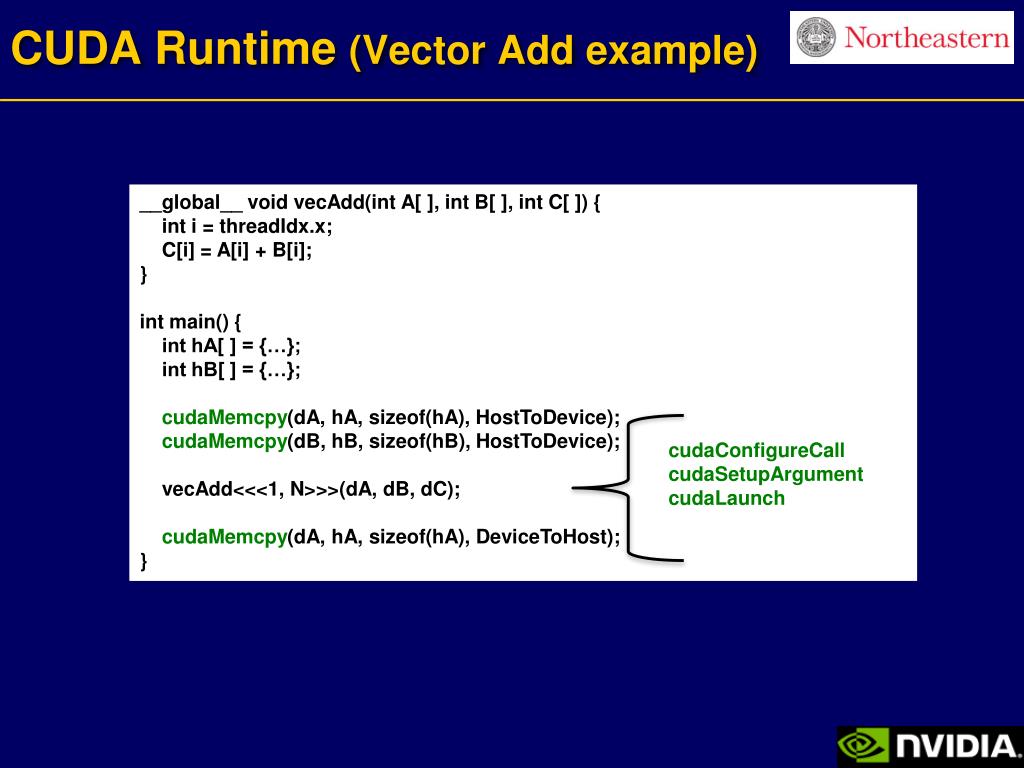

Download and install CudaLaunch on your device. For more information, see CloudGen Firewall Configuration for CudaLaunch. Configure the services and features you want to use in CudaLaunch.

I’m profiling a slow application, and I’m seeing that every kernel launch’s cudaLaunch call is taking around 150-200uS. This makes the smaller kernel launches much slower than they should be. Launching kernels is relatively expensive, but this sounds like an order of magnitude slower than it should be. The numbers I’ve heard are on the order of 5uS plus about 0.5uS per texture/surface. I have about 30 textures, which would account for about 15uS, which doesn’t take me anywhere near the 150uS I’m seeing. There are around 20-30 of those, but they’re not used by the small kernels that are being called a lot. I’m on Windows 7, with a GeForce 750 Ti on 347.88 drivers. The main loop is running around 8 kernels, and all but one of them are very simple. The timing numbers I’m getting are from the MSVC CUDA profiler. What else might cause kernel launches to be this slow?

I have edited the saxpy. You can leave out the cudaDeviceSynchronize() after the kernel if you want.
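To confirm which driver model and architecture a setup like the one described is actually using, a small device query can help. This sketch reads the compute capability and the `tccDriver` field of `cudaDeviceProp`; the helper `sm_name` is a hypothetical name. A GeForce 750 Ti should report compute capability 5.0, i.e. sm_50, in which case building with something like `nvcc -arch=sm_50` would produce native code instead of relying on JIT compilation from sm_30 PTX.

```cuda
#include <cstdio>
#include <string>
#include <cuda_runtime.h>

// Build an "sm_XY" architecture name from a compute capability.
std::string sm_name(int major, int minor) {
    return "sm_" + std::to_string(major) + std::to_string(minor);
}

int main() {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess || count == 0) {
        std::printf("no CUDA device found\n");
        return 1;
    }
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess) continue;
        // tccDriver is 1 when the device runs under the TCC driver on
        // Windows and 0 otherwise; a GeForce on Windows 7 would show 0 (WDDM).
        std::printf("device %d: %s, %s, tccDriver=%d\n", dev, prop.name,
                    sm_name(prop.major, prop.minor).c_str(), prop.tccDriver);
    }
    return 0;
}
```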

#Cudalaunch slow how to#

I am compiling some code that runs saxpy on the GPU. This code is from a university assignment that I am trying out. Unfortunately, compilation fails and I don’t know how to debug it.
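Since the assignment's code is not shown, here is a minimal, self-contained saxpy sketch that should compile with plain `nvcc` (for example `nvcc -o saxpy saxpy.cu`, where the file name is an assumption). It wraps every runtime call in a hypothetical `CUDA_CHECK` macro and compares the GPU result with a host reference, which makes it easier to tell compile errors, launch errors, and wrong results apart. It is an illustration, not the assignment's implementation.

```cuda
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// Print file/line and the CUDA error string, then exit, if a call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,   \
                         cudaGetErrorString(err_));                   \
            std::exit(1);                                             \
        }                                                             \
    } while (0)

// y[i] = a * x[i] + y[i], one element per thread.
__global__ void saxpy(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

// Host reference used to check the GPU result.
void saxpy_cpu(int n, float a, const float* x, float* y) {
    for (int i = 0; i < n; ++i) y[i] = a * x[i] + y[i];
}

int main() {
    const int n = 1 << 20;
    const float a = 2.0f;
    std::vector<float> x(n, 1.0f), y(n, 2.0f), ref(y);

    float *d_x = nullptr, *d_y = nullptr;
    CUDA_CHECK(cudaMalloc(&d_x, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&d_y, n * sizeof(float)));
    CUDA_CHECK(cudaMemcpy(d_x, x.data(), n * sizeof(float), cudaMemcpyHostToDevice));
    CUDA_CHECK(cudaMemcpy(d_y, y.data(), n * sizeof(float), cudaMemcpyHostToDevice));

    saxpy<<<(n + 255) / 256, 256>>>(n, a, d_x, d_y);
    CUDA_CHECK(cudaGetLastError());  // catches launch-configuration errors
    // This blocking copy on the default stream waits for the kernel, so an
    // explicit cudaDeviceSynchronize() is not required before it.
    CUDA_CHECK(cudaMemcpy(y.data(), d_y, n * sizeof(float), cudaMemcpyDeviceToHost));

    saxpy_cpu(n, a, x.data(), ref.data());
    int mismatches = 0;
    for (int i = 0; i < n; ++i)
        if (y[i] != ref[i]) ++mismatches;
    std::printf("mismatches: %d\n", mismatches);

    CUDA_CHECK(cudaFree(d_x));
    CUDA_CHECK(cudaFree(d_y));
    return mismatches == 0 ? 0 : 1;
}
```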
